Naver boostcamp AI Tech / week 04, 05

Implementing AlexNet - PyTorch

끵뀐꿩긘

2022. 10. 12. 07:51

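What is AlexNet?

AlexNet won ILSVRC 2012 with a top-5 test error rate of 15.3% in the competition (the paper reports 17.0% top-5 on the ILSVRC-2010 test set). It marked the revival of deep learning in computer vision.

After this competition, deep-learning models came to dominate ILSVRC.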

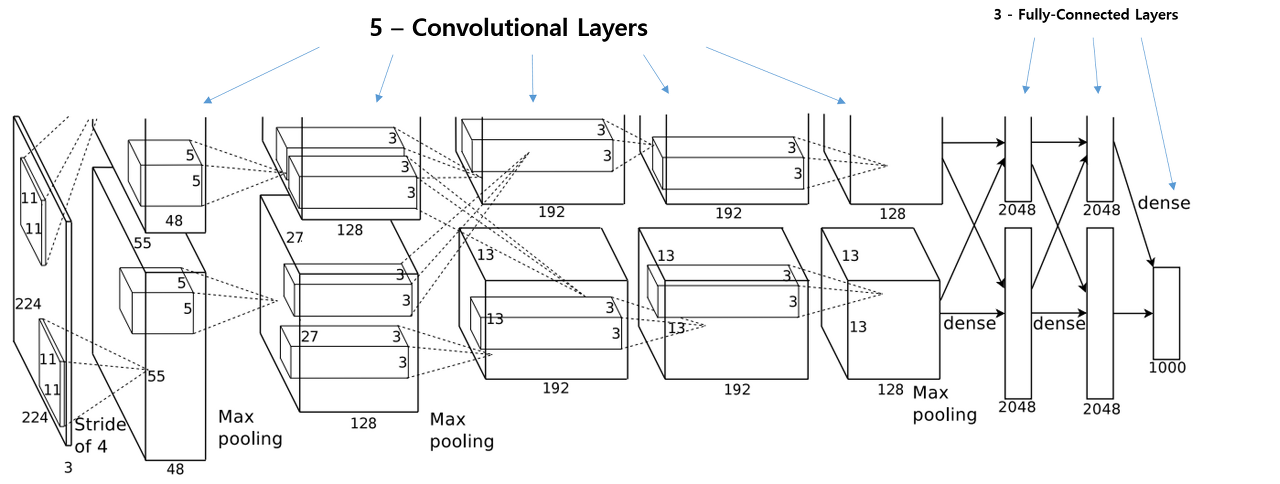

Structure of AlexNet

The model consists of 5 convolutional layers and 3 fully connected (FC) layers.
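You can see how these eight layers fit together by tracking the feature-map size and the parameter count layer by layer. The sketch below uses plain Python arithmetic. It assumes the 3×227×227 input and the 10-class output used in this post's MNIST implementation. The helper names (`conv_out`, `conv_params`, `fc_params`) are hypothetical and do not come from any library. The same output-size formula, floor((W - K + 2P) / S) + 1, applies to both the convolutions and the max-pooling layers.

```python
def conv_out(size, kernel, stride=1, padding=0):
    # Spatial output size of a conv/pool layer without dilation:
    # floor((W - K + 2P) / S) + 1
    return (size - kernel + 2 * padding) // stride + 1

def conv_params(c_in, c_out, k):
    # weights (c_out * c_in * k * k) plus one bias per output channel
    return c_out * c_in * k * k + c_out

def fc_params(n_in, n_out):
    # weight matrix plus one bias per output unit
    return n_in * n_out + n_out

s = 227
s = conv_out(s, 11, stride=4)     # conv1 -> 55
s = conv_out(s, 3, stride=2)      # pool1 -> 27
s = conv_out(s, 5, padding=2)     # conv2 -> 27
s = conv_out(s, 3, stride=2)      # pool2 -> 13
for _ in range(3):                # conv3, conv4, conv5 (3x3, padding 1) keep 13
    s = conv_out(s, 3, padding=1)
s = conv_out(s, 3, stride=2)      # pool5 -> 6
flat = 256 * s * s                # number of input features of fc1

params = [
    conv_params(3, 96, 11), conv_params(96, 256, 5), conv_params(256, 384, 3),
    conv_params(384, 384, 3), conv_params(384, 256, 3),
    fc_params(flat, 4096), fc_params(4096, 4096), fc_params(4096, 10),
]
print(s, flat)        # 6 9216
print(sum(params))    # 58322314
```

Under these assumptions, the total matches the 58,322,314 parameters that torchsummary reports for the model later in this post. Over 93% of those parameters sit in the first two FC layers.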

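At the time, a single GPU did not have enough memory to train the network, so training was split across two GPUs. This is why the middle layers appear as two parallel streams in the figure above.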
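A single-device implementation like the one in this post can still imitate the two-GPU split with grouped convolutions. The minimal sketch below relies on the `groups` argument of PyTorch's `nn.Conv2d`. With `groups=2`, each half of the output channels sees only one half of the input channels. The original paper used this connectivity for conv2, conv4 and conv5, while conv3 and the FC layers were connected across both GPUs. This reproduces only the connection pattern, not the actual model-parallel training.

```python
import torch
import torch.nn as nn

# conv2 as implemented in this post: every output channel sees all 96 input channels
full = nn.Conv2d(96, 256, kernel_size=5, padding=2)
# groups=2 splits the channels into two independent halves (48 in -> 128 out each),
# imitating the per-GPU connectivity of conv2/conv4/conv5 in the paper
grouped = nn.Conv2d(96, 256, kernel_size=5, padding=2, groups=2)

def n_params(m):
    # total number of learnable values in a module
    return sum(p.numel() for p in m.parameters())

x = torch.randn(1, 96, 27, 27)
print(full(x).shape, grouped(x).shape)     # both torch.Size([1, 256, 27, 27])
print(n_params(full), n_params(grouped))   # 614656 307456
print(grouped.weight.shape)                # torch.Size([256, 48, 5, 5])
```

The output shape is unchanged, but each grouped kernel spans only 48 input channels, so the layer has roughly half the parameters.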

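Key features:

- It uses the non-saturating ReLU activation, which speeds up training and keeps gradients flowing well during backpropagation.

- It uses dropout to reduce overfitting. Dropout also improves performance through an ensemble-like effect.

- It uses LRN (local response normalization) to make neuron outputs compete with those of neighboring channels. (LRN has since been largely replaced by batch normalization.)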
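You can check the three points above directly in PyTorch. The sketch below uses synthetic inputs, and its variable names are illustrative. It shows three things:

1. tanh saturates for large inputs while ReLU does not.
2. `nn.Dropout` behaves differently in train and eval mode.
3. LRN, which this post's implementation omits, can be added with `nn.LocalResponseNorm`.

The LRN hyperparameters n=5, k=2, α=1e-4, β=0.75 are the values reported in the AlexNet paper. PyTorch's formula divides α by `size`, so α is multiplied by 5 here to match the paper's definition. PyTorch also uses inverted dropout (scaling at training time), whereas the paper multiplied outputs by 0.5 at test time. The two approaches are equivalent in expectation.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# 1) Saturation: gradient of tanh vs. ReLU at a large positive input
x = torch.tensor([5.0], requires_grad=True)
torch.tanh(x).backward()
tanh_grad = x.grad.item()          # 1 - tanh(5)^2, roughly 1.8e-4
x.grad = None
torch.relu(x).backward()
relu_grad = x.grad.item()          # exactly 1.0 for any positive input

# 2) Dropout: kept units are scaled by 1/(1-p) in train mode; identity in eval mode
drop = nn.Dropout(p=0.5)
ones = torch.ones(1000)
drop.train()
train_out = drop(ones)             # entries are 0.0 (dropped) or 2.0 (kept and scaled)
drop.eval()
eval_out = drop(ones)              # unchanged at inference time

# 3) LRN with the paper's hyperparameters; alpha is multiplied by size=5
#    because PyTorch uses alpha / size inside the normalization
lrn = nn.LocalResponseNorm(size=5, alpha=5 * 1e-4, beta=0.75, k=2.0)
a = torch.randn(2, 8, 4, 4)        # (batch, channels, height, width)
sq = a.pow(2)
manual = torch.empty_like(a)
C = a.size(1)
for i in range(C):                 # paper's formula: sum of squares over channels i-2..i+2
    lo, hi = max(0, i - 2), min(C - 1, i + 2)
    manual[:, i] = a[:, i] / (2.0 + 1e-4 * sq[:, lo:hi + 1].sum(dim=1)).pow(0.75)
lrn_out = lrn(a)

# Where the paper places LRN: after the ReLU of conv1 and conv2, before max-pooling
conv1_with_lrn = nn.Sequential(
    nn.Conv2d(3, 96, kernel_size=11, stride=4),
    nn.ReLU(),
    nn.LocalResponseNorm(size=5, alpha=5 * 1e-4, beta=0.75, k=2.0),
    nn.MaxPool2d(kernel_size=3, stride=2),
)

print(tanh_grad, relu_grad)
print(sorted(set(train_out.tolist())), torch.equal(eval_out, ones))
print(torch.allclose(lrn_out, manual, atol=1e-6))
print(conv1_with_lrn(torch.randn(1, 3, 227, 227)).shape)   # torch.Size([1, 96, 27, 27])
```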

Implementing AlexNet

The MNIST dataset was used.

```python
import os
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

import torch
import torchvision
import torch.nn as nn
import torch.optim as optim
import torch.nn.functional as F
import torch.nn.init as init

from PIL import Image
from torchvision import transforms, datasets
from torch.utils.data import Dataset, DataLoader
```

```python
# Basic settings
epochs = 10
batch_size = 64
device = "cuda" if torch.cuda.is_available() else "cpu"
class_names = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]

print(torch.__version__)
print(device)
```

```text
>>>
1.12.1+cu113
cuda
```

```python
# Transform & dataset
transform = transforms.Compose([
    # Resize to 227x227 to match AlexNet's expected input size
    transforms.Resize(227),
    # MNIST is grayscale; replicate it to 3 channels to match AlexNet's input
    transforms.Grayscale(num_output_channels=3),
    # Convert the PIL image to a float tensor with values scaled to [0, 1]
    # (a rescaling, not mean/std standardization)
    transforms.ToTensor()
])

# Training data
train_data = datasets.MNIST(
    root="./data",
    train=True,
    download=True,
    transform=transform
)

# Test data
test_data = datasets.MNIST(
    root="./data",
    train=False,
    download=True,
    transform=transform
)
```

```python
# Data loaders
train_dataloader = DataLoader(train_data, batch_size=batch_size, shuffle=True)
test_dataloader = DataLoader(test_data, batch_size=batch_size, shuffle=True)
```

AlexNet model

```python
# AlexNet (LRN not implemented)
class alexnet(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Sequential(
            # input: (3, 227, 227) -> output: (96, 55, 55); 96 kernels of size 11x11
            nn.Conv2d(in_channels=3, out_channels=96, kernel_size=11, stride=4, padding=0),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=3, stride=2)  # 55 -> (55 - (3 - 1) - 1) // 2 + 1 = 27
        )
        self.conv2 = nn.Sequential(
            # input: (96, 27, 27) -> output: (256, 27, 27); 256 kernels of size 5x5
            nn.Conv2d(in_channels=96, out_channels=256, kernel_size=5, stride=1, padding=2),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=3, stride=2)  # 27 -> (27 - (3 - 1) - 1) // 2 + 1 = 13
        )
        self.conv3 = nn.Sequential(
            # input: (256, 13, 13) -> output: (384, 13, 13); 384 kernels of size 3x3
            nn.Conv2d(in_channels=256, out_channels=384, kernel_size=3, stride=1, padding=1),
            nn.ReLU()
        )
        self.conv4 = nn.Sequential(
            # input: (384, 13, 13) -> output: (384, 13, 13); 384 kernels of size 3x3
            nn.Conv2d(in_channels=384, out_channels=384, kernel_size=3, stride=1, padding=1),
            nn.ReLU()
        )
        self.conv5 = nn.Sequential(
            # input: (384, 13, 13) -> output: (256, 13, 13); 256 kernels of size 3x3
            nn.Conv2d(in_channels=384, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(3, 2)  # 13 -> (13 - (3 - 1) - 1) // 2 + 1 = 6
        )
        self.fc1 = nn.Sequential(
            nn.Linear(256 * 6 * 6, 4096),
            nn.ReLU(),
            nn.Dropout(p=0.5)  # dropout
        )
        self.fc2 = nn.Sequential(
            nn.Linear(4096, 4096),
            nn.ReLU(),
            nn.Dropout(p=0.5)  # dropout
        )
        self.fc3 = nn.Linear(4096, 10)

    def forward(self, x):
        out = self.conv1(x)
        out = self.conv2(out)
        out = self.conv3(out)
        out = self.conv4(out)
        out = self.conv5(out)
        out = out.view(out.size(0), -1)  # flatten: (N, 256, 6, 6) -> (N, 9216)
        out = self.fc1(out)
        out = self.fc2(out)
        out = self.fc3(out)
        # log-softmax is applied inside the model, so the loss function must be nll_loss
        out = F.log_softmax(out, dim=1)
        return out

    # Weight initialization: He (Kaiming) initialization, since the network uses ReLU
    def init_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):    # init conv
                nn.init.kaiming_normal_(m.weight)
                nn.init.zeros_(m.bias)
            elif isinstance(m, nn.Linear):  # init dense
                nn.init.kaiming_normal_(m.weight)
                nn.init.zeros_(m.bias)
```

```python
model = alexnet().to(device)  # create the model
criterion = F.nll_loss  # if the model did not apply (log-)softmax, use torch.nn.CrossEntropyLoss instead
optimizer = optim.Adam(model.parameters())
model
```

```text
>>>
alexnet(
  (conv1): Sequential(
    (0): Conv2d(3, 96, kernel_size=(11, 11), stride=(4, 4))
    (1): ReLU()
    (2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (conv2): Sequential(
    (0): Conv2d(96, 256, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (1): ReLU()
    (2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (conv3): Sequential(
    (0): Conv2d(256, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU()
  )
  (conv4): Sequential(
    (0): Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU()
  )
  (conv5): Sequential(
    (0): Conv2d(384, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU()
    (2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (fc1): Sequential(
    (0): Linear(in_features=9216, out_features=4096, bias=True)
    (1): ReLU()
    (2): Dropout(p=0.5, inplace=False)
  )
  (fc2): Sequential(
    (0): Linear(in_features=4096, out_features=4096, bias=True)
    (1): ReLU()
    (2): Dropout(p=0.5, inplace=False)
  )
  (fc3): Linear(in_features=4096, out_features=10, bias=True)
)
```

```python
# torch summary
from torchsummary import summary as summary_

# device must be "cuda" or "cpu"; torchsummary defaults to "cuda"
summary_(model, (3, 227, 227), batch_size, device=device)
```

```text
>>>
----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1           [64, 96, 55, 55]          34,944
              ReLU-2           [64, 96, 55, 55]               0
         MaxPool2d-3           [64, 96, 27, 27]               0
            Conv2d-4          [64, 256, 27, 27]         614,656
              ReLU-5          [64, 256, 27, 27]               0
         MaxPool2d-6          [64, 256, 13, 13]               0
            Conv2d-7          [64, 384, 13, 13]         885,120
              ReLU-8          [64, 384, 13, 13]               0
            Conv2d-9          [64, 384, 13, 13]       1,327,488
             ReLU-10          [64, 384, 13, 13]               0
           Conv2d-11          [64, 256, 13, 13]         884,992
             ReLU-12          [64, 256, 13, 13]               0
        MaxPool2d-13            [64, 256, 6, 6]               0
           Linear-14                 [64, 4096]      37,752,832
             ReLU-15                 [64, 4096]               0
          Dropout-16                 [64, 4096]               0
           Linear-17                 [64, 4096]      16,781,312
             ReLU-18                 [64, 4096]               0
          Dropout-19                 [64, 4096]               0
           Linear-20                   [64, 10]          40,970
================================================================
Total params: 58,322,314
Trainable params: 58,322,314
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 37.74
Forward/backward pass size (MB): 706.65
Params size (MB): 222.48
Estimated Total Size (MB): 966.87
----------------------------------------------------------------
```

```python
# Evaluation function for train_accr / test_accr
def func_eval(model, data_iter, device):
    with torch.no_grad():  # disable gradient tracking during evaluation
        n_total, n_correct = 0, 0
        model.eval()  # switch dropout (and BN, if any) to inference behavior
        for batch_in, batch_out in data_iter:  # iterate over every batch
            y_trgt = batch_out.to(device)
            model_pred = model(batch_in.to(device))
            _, y_pred = torch.max(model_pred, 1)  # torch.max(x, dim) -> (max values, argmax indices)
            n_correct += (y_pred == y_trgt).sum().item()
            n_total += batch_in.size(0)
        val_accr = n_correct / n_total
        model.train()  # back to train mode
    return val_accr
```

```python
# Training & evaluation
print("Start training.")
model.init_weights()  # initialize parameters
model.train()  # to train mode
print_every = 1
for epoch in range(epochs):
    loss_val_sum = 0.0
    for batch_in, batch_out in train_dataloader:
        # Forward pass
        y_pred = model(batch_in.to(device))
        loss_out = criterion(y_pred, batch_out.to(device))
        # Update
        optimizer.zero_grad()  # reset gradients
        loss_out.backward()    # backpropagate
        optimizer.step()       # optimizer update
        # .item() accumulates a Python float, so no autograd graph is kept alive across the epoch
        loss_val_sum += loss_out.item()
    loss_val_avg = loss_val_sum / len(train_dataloader)
    # Print
    if ((epoch % print_every) == 0) or (epoch == (epochs - 1)):
        train_accr = func_eval(model, train_dataloader, device)
        test_accr = func_eval(model, test_dataloader, device)
        print("epoch:[%d] loss:[%.3f] train_accr:[%.3f] test_accr:[%.3f]." %
              (epoch, loss_val_avg, train_accr, test_accr))
print("Done")
```

```text
>>>
Start training.
epoch:[0] loss:[0.240] train_accr:[0.975] test_accr:[0.978].
epoch:[1] loss:[0.076] train_accr:[0.981] test_accr:[0.980].
epoch:[2] loss:[0.063] train_accr:[0.982] test_accr:[0.981].
epoch:[3] loss:[0.059] train_accr:[0.988] test_accr:[0.985].
epoch:[4] loss:[0.052] train_accr:[0.990] test_accr:[0.989].
epoch:[5] loss:[0.047] train_accr:[0.990] test_accr:[0.988].
epoch:[6] loss:[0.045] train_accr:[0.994] test_accr:[0.990].
epoch:[7] loss:[0.046] train_accr:[0.995] test_accr:[0.991].
epoch:[8] loss:[0.046] train_accr:[0.992] test_accr:[0.988].
epoch:[9] loss:[0.037] train_accr:[0.994] test_accr:[0.990].
Done
```

```python
# Visualize model predictions
test = iter(test_dataloader)
test_x, test_y = next(test)
with torch.no_grad():
    model.eval()  # to evaluation mode
    y_pred = model(test_x.to(device))
y_pred = y_pred.argmax(dim=1).cpu()  # predicted class per image, moved to CPU for plotting

plt.figure(figsize=(30, 30))
for idx in range(batch_size):
    plt.subplot(8, 8, idx + 1)
    plt.imshow(test_x[idx].permute(1, 2, 0), cmap='gray')  # (C, H, W) -> (H, W, C)
    plt.axis('off')
    plt.title("Pred:%d, Label:%d" % (y_pred[idx], test_y[idx]))
plt.show()
print("Done")
```
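Because `forward` ends with `F.log_softmax`, the model returns log-probabilities. That is why the criterion is `F.nll_loss`, and why the visualization can take the `argmax` of the output directly. The small check below uses synthetic logits, not outputs of the trained model. It confirms that this pairing gives the same loss as `nn.CrossEntropyLoss` applied to raw logits.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)             # synthetic raw fc3 outputs for a batch of 4
target = torch.tensor([3, 0, 9, 1])     # synthetic labels

log_probs = F.log_softmax(logits, dim=1)          # what alexnet.forward returns
loss_nll = F.nll_loss(log_probs, target)          # the criterion used in this post
loss_ce = nn.CrossEntropyLoss()(logits, target)   # the alternative if forward returned raw logits

probs = log_probs.exp()                 # back to probabilities; each row sums to 1
pred = log_probs.argmax(dim=1)          # same class as argmax over logits or probabilities

print(loss_nll.item(), loss_ce.item())
print(probs.sum(dim=1))
```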

Training itself did not seem to take long. The whole run most likely took as long as 40 minutes because every evaluation step went through both the full train and test datasets.
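If most of the time goes into the two full evaluation passes, one option is to skip the separate pass over the 60,000 training images. The sketch below relies on two hypothetical helpers:

- `train_one_epoch` counts correct predictions during training.
- `eval_subset` measures accuracy on a fixed random subset via `torch.utils.data.Subset`.

A tiny synthetic dataset and a linear model stand in for MNIST and AlexNet so that the sketch runs in seconds. Both helpers expect a model that returns log-probabilities, like the `alexnet` class above.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import DataLoader, Subset, TensorDataset


def train_one_epoch(model, loader, optimizer, device):
    """Hypothetical helper: one training pass that also counts correct predictions."""
    model.train()
    loss_sum, n_correct, n_total = 0.0, 0, 0
    for x, y in loader:
        x, y = x.to(device), y.to(device)
        log_probs = model(x)                        # model returns log-probabilities
        loss = F.nll_loss(log_probs, y)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        loss_sum += loss.item() * x.size(0)         # batch-mean loss -> per-sample sum
        n_correct += (log_probs.argmax(dim=1) == y).sum().item()
        n_total += x.size(0)
    return loss_sum / n_total, n_correct / n_total


def eval_subset(model, dataset, n, batch_size, device, seed=0):
    """Hypothetical helper: accuracy on a fixed random subset of n samples."""
    g = torch.Generator().manual_seed(seed)
    idx = torch.randperm(len(dataset), generator=g)[:n].tolist()
    loader = DataLoader(Subset(dataset, idx), batch_size=batch_size)
    model.eval()
    n_correct = 0
    with torch.no_grad():
        for x, y in loader:
            n_correct += (model(x.to(device)).argmax(dim=1) == y.to(device)).sum().item()
    model.train()
    return n_correct / len(idx)


# Tiny synthetic stand-in for MNIST + AlexNet so the sketch runs quickly
torch.manual_seed(0)
X = torch.randn(512, 20)
Y = (X[:, 0] > 0).long()                 # label depends only on the first feature
data = TensorDataset(X, Y)
toy = nn.Sequential(nn.Linear(20, 2), nn.LogSoftmax(dim=1))
opt = torch.optim.Adam(toy.parameters(), lr=0.05)
loader = DataLoader(data, batch_size=64, shuffle=True)

for epoch in range(3):
    loss, run_acc = train_one_epoch(toy, loader, opt, "cpu")
    sub_acc = eval_subset(toy, data, n=128, batch_size=64, device="cpu")
    print(f"epoch:[{epoch}] loss:[{loss:.3f}] running_acc:[{run_acc:.3f}] subset_acc:[{sub_acc:.3f}]")
```

The running accuracy is measured while the weights are still changing and with dropout active. It only approximates the `train_accr` that `func_eval` computes in eval mode, so the test set can still be evaluated in full.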